The hearing aid market, particularly the over-the-counter (OTC) segment, is predicted to expand significantly in the coming years. In 2022, the Food and Drug Administration (FDA) established a new category of OTC hearing aids, allowing consumers to purchase devices without a medical exam, prescription, or audiologist visit. This regulatory change has accelerated the adoption of Bluetooth®-enabled OTC hearing devices, with projections indicating a tenfold increase in shipments by 2028.

The hearing aids of tomorrow will track and even predict aspects of both your physical and mental health, whether or not you’re one of the 55 million Americans projected to have hearing loss by 2030. As the population ages and the incidence of hearing loss continues to rise, advanced, feature-rich hearing aids are more important than ever for improving quality of life.

Bluetooth Low Energy (LE) technology allows manufacturers to design wireless, connected, and personalized hearing devices. This technology has the potential to help the more than 1.5 billion people worldwide living with some degree of hearing loss, a number projected to grow to 2.5 billion, underscoring the need for advanced hearing aid technology.

Key Challenges in Hearing Aid Design

To fully appreciate the transformative potential of LE Audio in hearing aids, it helps to understand what is involved in developing these sophisticated devices. Hearing aid design presents a unique set of challenges and requirements that must be carefully balanced to deliver optimal performance, connectivity, and user experience.

The latest hearing aids utilize digital signal processing (DSP) algorithms to filter, shape, and enhance incoming audio signals based on the user’s specific hearing profile and surrounding acoustic environment. These algorithms must provide features like noise reduction, feedback cancellation, directional beamforming, and sound environment classification, while maintaining low latency and high audio fidelity.
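To make "shaping audio to the user’s hearing profile" more concrete, here is a minimal C sketch that converts audiogram thresholds into per-band insertion gain using the classic half-gain rule (gain of roughly half the hearing loss in dB). This is an illustrative assumption, not how any particular product fits a user: modern fittings rely on prescriptive formulas such as NAL-NL2 or DSL v5 with level-dependent compression, and the band count, the `MAX_GAIN_DB` cap, and the function name are hypothetical.

```c
#include <stddef.h>

#define NUM_BANDS   6       /* e.g. 250, 500, 1k, 2k, 4k, 8k Hz (illustrative) */
#define MAX_GAIN_DB 40.0f   /* hypothetical safety cap on prescribed gain */

/* Half-gain rule: insertion gain ~= 0.5 * hearing threshold (dB HL).
   Thresholds better than 0 dB HL get no gain, and results are capped. */
void half_gain_rule(const float threshold_db_hl[NUM_BANDS],
                    float gain_db[NUM_BANDS])
{
    for (size_t i = 0; i < NUM_BANDS; ++i) {
        float g = 0.5f * threshold_db_hl[i];
        if (g < 0.0f) g = 0.0f;
        if (g > MAX_GAIN_DB) g = MAX_GAIN_DB;
        gain_db[i] = g;
    }
}
```

For thresholds of 10, 20, 40, 60, 90, and -5 dB HL, the sketch prescribes 5, 10, 20, 30, 40 (capped), and 0 dB. A real pipeline would convert these to linear gains and apply them in a filter bank.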

Hearing aids also need to connect and interoperate with a range of devices, such as phones, computers, and wearables. Bluetooth is the preferred wireless standard for hearing aids due to its ubiquity, relatively low power consumption, and support for high-quality audio streaming.

Integrating Bluetooth connectivity is particularly intricate because the small size of hearing aids leaves little room for antennas, radios, and other components required for wireless communication. Engineers must carefully design and optimize antenna geometry, placement, and materials to achieve good signal strength and stability while minimizing interference from other electronic devices or the user’s body.

Moreover, switching between Bluetooth modes, such as streaming audio from a smartphone or computer, connecting to a remote microphone, or communicating with a smartphone app for configuration and control, must be seamless. This requires a reliable Bluetooth stack that can manage multiple connections, prioritize audio streams, and reconnect when a link is lost. Hearing aids must also be compatible with a broad range of devices and operating systems, a challenging task given the diversity of the Bluetooth ecosystem.
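The stream-prioritization and reconnection duties described above can be illustrated with a small policy sketch in C. The source list, its priority order (phone call over remote microphone over media), and the backoff timings are hypothetical choices for illustration; they do not correspond to any specific Bluetooth stack’s API.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical audio sources, declared in ascending priority. */
typedef enum {
    SRC_NONE = 0,
    SRC_MEDIA,        /* music or video from a phone or computer */
    SRC_REMOTE_MIC,   /* companion remote microphone */
    SRC_PHONE_CALL,   /* highest priority: an active call */
    SRC_COUNT
} audio_source_t;

/* Route the highest-priority source that currently has an active stream. */
audio_source_t select_stream(const bool active[SRC_COUNT])
{
    for (int s = SRC_COUNT - 1; s > SRC_NONE; --s) {
        if (active[s]) return (audio_source_t)s;
    }
    return SRC_NONE;
}

/* Exponential backoff after link loss: 100 ms, 200 ms, 400 ms, ...
   capped at 5 s. The timings are illustrative assumptions. */
uint32_t reconnect_delay_ms(unsigned attempt)
{
    const uint32_t base_ms = 100, max_ms = 5000;
    uint32_t delay = base_ms;
    for (unsigned i = 0; i < attempt && delay < max_ms; ++i) {
        delay *= 2;
    }
    return delay > max_ms ? max_ms : delay;
}
```

Doubling the retry interval up to a cap keeps a lost link from draining the battery with constant reconnection attempts, while still recovering quickly from brief dropouts.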

Hearing aids must also meet tight size, weight, and power constraints to maximize user comfort and convenience. As all-day wearable devices, hearing aids need to be lightweight and discreet, fitting comfortably behind or inside the ear. This requires miniaturized components, including batteries, microphones, speakers, and processors, that deliver high performance within an extremely limited space.

Battery life is another key consideration: users expect their devices to last a full day on a single charge, which is difficult given the power demands of wireless connectivity, audio processing, and other features. To achieve full-day or even multi-day battery life, manufacturers must carefully select each component for ultra-low power consumption, often using specialized low-power chipsets that combine efficient processing cores with dedicated hardware accelerators for audio DSP and wireless connectivity.

The Role of MCUs in Hearing Aid Applications

The microcontroller unit (MCU) is the central “brain” of a modern hearing aid, responsible for managing all aspects of the device’s operation. One of the most critical functions of the MCU is to provide dedicated hardware acceleration for audio digital signal processing (DSP) tasks.

Hearing aids use algorithms for noise reduction, feedback cancellation, beamforming, and sound classification. These computationally intensive tasks require significant processing power, yet they must run while consuming minimal battery power. By offloading them to specialized DSP hardware, an MCU can perform complex audio processing at much lower power than running the same tasks on the main CPU.
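Feedback cancellation is commonly built around an adaptive filter that learns the acoustic path from the receiver (speaker) back to the microphone and subtracts its estimate. The C sketch below uses the textbook normalized LMS (NLMS) update; it is a generic illustration, not a description of any vendor’s algorithm, and the tap count, step size, and `afc_*` names are hypothetical.

```c
#include <stddef.h>

#define AFC_TAPS 16

typedef struct {
    float w[AFC_TAPS];  /* estimated feedback-path impulse response */
    float x[AFC_TAPS];  /* recent receiver output samples, x[0] newest */
    float mu;           /* NLMS step size, 0 < mu < 2 */
    float eps;          /* regularizer that avoids division by zero */
} afc_t;

void afc_init(afc_t *s, float mu, float eps)
{
    for (size_t i = 0; i < AFC_TAPS; ++i) { s->w[i] = 0.0f; s->x[i] = 0.0f; }
    s->mu = mu;
    s->eps = eps;
}

/* Process one sample. spk: sample sent to the receiver; mic: microphone sample.
   Returns e = mic - estimated feedback, the compensated input for later DSP. */
float afc_process(afc_t *s, float spk, float mic)
{
    for (size_t i = AFC_TAPS - 1; i > 0; --i) s->x[i] = s->x[i - 1];
    s->x[0] = spk;

    float y = 0.0f, energy = 0.0f;           /* filter output and input energy */
    for (size_t i = 0; i < AFC_TAPS; ++i) {
        y += s->w[i] * s->x[i];
        energy += s->x[i] * s->x[i];
    }
    float e = mic - y;
    float step = s->mu * e / (s->eps + energy);  /* normalized step */
    for (size_t i = 0; i < AFC_TAPS; ++i) s->w[i] += step * s->x[i];
    return e;
}
```

The multiply-accumulate loops are exactly the kind of work that vector extensions and DSP accelerators speed up. In a real device the incoming sound is correlated with the receiver output, which biases a plain NLMS estimate, so practical designs add decorrelation measures (for example, prewhitening or a small frequency shift); those are omitted here.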

Integrated wireless connectivity is another essential feature of MCUs in hearing aid designs. Today’s hearing aids go beyond the ear: they connect to our phones through Bluetooth and can even monitor our steps and exercise. The hearing aids of tomorrow aim to do more, such as not only detecting that the wearer has fallen but predicting whether they are likely to trip and fall.

MCUs with integrated wireless subsystems that implement the LE Audio standard allow hearing aids to communicate wirelessly with smartphones, tablets, and other Bluetooth-enabled devices. These MCUs handle all aspects of the wireless stack, including connection management, data transmission, and audio streaming.

In addition to audio processing and wireless connectivity, hearing aid MCUs also handle sensor fusion. Hearing aids incorporate multiple sensors, such as accelerometers for tap control, ear-placement detection, and motion detection, so the MCU can aggregate their data and run sensor fusion algorithms for features like automatic program switching based on the user’s environment, or tap control for adjusting the volume and changing audio presets.
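As one building block of such features, the C sketch below detects a double tap from raw accelerometer samples: a slow running average removes gravity and posture, the squared magnitude of what remains is compared with a threshold, and two taps within a time window count as a double tap. All thresholds, timings, and names are hypothetical; many accelerometers also offer built-in tap engines that a real design might use instead.

```c
#include <stdbool.h>
#include <stdint.h>

#define TAP_THRESH_MG2 (1500 * 1500)  /* squared deviation threshold, mg^2 */
#define TAP_REFRACT_MS 80             /* ignore ringing right after a tap */
#define DOUBLE_TAP_MS  400            /* maximum gap between the two taps */

typedef struct {
    int32_t base[3];      /* slow running average per axis (gravity), mg */
    int32_t last_tap_ms;  /* timestamp of the pending first tap */
    bool    have_tap;     /* a first tap is waiting for a second one */
} tap_detector_t;

void tap_init(tap_detector_t *d, const int16_t a_mg[3])
{
    for (int i = 0; i < 3; ++i) d->base[i] = a_mg[i];
    d->last_tap_ms = 0;
    d->have_tap = false;
}

/* Feed one sample (milli-g) with its timestamp; returns true on a double tap. */
bool tap_update(tap_detector_t *d, const int16_t a_mg[3], int32_t now_ms)
{
    int64_t mag2 = 0;
    for (int i = 0; i < 3; ++i) {
        int32_t dev = a_mg[i] - d->base[i];
        d->base[i] += dev / 16;       /* exponential average, alpha = 1/16 */
        mag2 += (int64_t)dev * dev;
    }
    if (mag2 < TAP_THRESH_MG2) return false;

    int32_t dt = now_ms - d->last_tap_ms;
    if (d->have_tap && dt < TAP_REFRACT_MS) return false;  /* same tap ringing */
    if (d->have_tap && dt <= DOUBLE_TAP_MS) {
        d->have_tap = false;          /* second tap: report and reset */
        return true;
    }
    d->have_tap = true;               /* first tap, or the previous one timed out */
    d->last_tap_ms = now_ms;
    return false;
}
```

Working with squared magnitudes avoids a square root on every sample, which matters on a device that may poll the sensor continuously.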

Some advanced hearing aids are starting to use artificial intelligence (AI) and machine learning (ML) for capabilities like enhanced speech recognition in noisy environments. When AI/ML models run on an MCU, they can be accelerated by a dedicated neural processing unit (NPU). The MCU also integrates power management controllers that optimize energy efficiency by dynamically managing power to various subsystems, rapidly switching between active, idle, and sleep states to conserve battery life.
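The active/idle/sleep switching described above can be sketched as a simple inactivity-timer state machine. This C version is a hypothetical illustration with made-up timeouts and names, not Alif’s power management API; a real MCU would gate individual power domains rather than track one aggregate state.

```c
#include <stdbool.h>
#include <stdint.h>

typedef enum { PWR_ACTIVE, PWR_IDLE, PWR_SLEEP } pwr_state_t;

#define IDLE_AFTER_MS  50u    /* hypothetical inactivity timeouts */
#define SLEEP_AFTER_MS 2000u

typedef struct {
    pwr_state_t state;
    uint32_t    quiet_ms;     /* time since the last reported activity */
} pwr_ctrl_t;

/* Call periodically with the elapsed time and whether any subsystem
   (audio, radio, sensors) reported work during that interval. */
pwr_state_t pwr_step(pwr_ctrl_t *p, uint32_t elapsed_ms, bool activity)
{
    if (activity) {
        p->quiet_ms = 0;
        p->state = PWR_ACTIVE;
    } else {
        if (p->quiet_ms < SLEEP_AFTER_MS) p->quiet_ms += elapsed_ms;  /* no overflow */
        if (p->quiet_ms >= SLEEP_AFTER_MS)     p->state = PWR_SLEEP;
        else if (p->quiet_ms >= IDLE_AFTER_MS) p->state = PWR_IDLE;
    }
    return p->state;
}
```

Any activity returns the device to the active state immediately, so the timeouts only trade a little extra power for fewer wake-up transitions.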

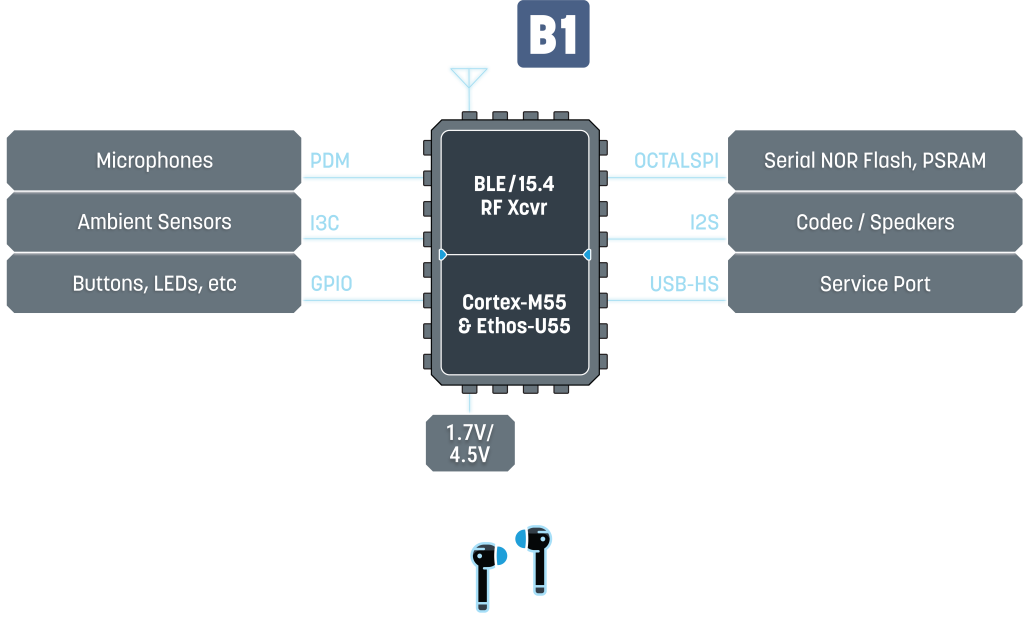

Block diagram of B1 Balletto MCUs in an LE Audio application. Image credit: Alif

Alif Semiconductor’s Balletto MCUs: Breakthrough Solutions for Hearing Aids

Alif’s Balletto™ family of MCUs is purpose-built to address the unique challenges of hearables such as true wireless stereo (TWS) earbuds and hearing aids. Balletto MCUs deliver an unprecedented combination of powerful processing, wireless connectivity, hardware-accelerated AI/ML, ultra-low power consumption, and miniature chip-scale packaging.

The Balletto MCU includes an Arm® Cortex™-M55 CPU with the Helium vector extension, an Ethos™-U55 NPU, integrated wireless protocol stack processing, and cybersecurity functions. Balletto fully supports the new LE Audio capabilities, and it also supports 802.15.4 connectivity for Thread and Matter.

Balletto MCUs are designed for power efficiency, consuming as little as 1.5 mA in Bluetooth receive mode and supporting a 700 nA stop mode for extremely low sleep current. Dynamic power management across eight independent power domains and a 22 µA/MHz run mode help maintain high energy efficiency in all operating scenarios. The chip-scale package integrates all necessary components into a small footprint, minimizing PCB area and allowing for discreet designs.
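These current figures lend themselves to a quick back-of-the-envelope budget. The C sketch below uses the three Balletto numbers quoted above (1.5 mA receive, 700 nA stop, 22 µA/MHz run) and estimates average MCU current as a sum of duty-cycle-weighted contributions. The duty cycles, clock frequency, and battery capacity in the example are hypothetical, the model ignores everything outside the MCU (receiver amplifier, microphones, codec), and it counts the stop-mode floor for the whole period, so the result is a rough estimate, not a datasheet figure.

```c
/* Figures quoted in the text; everything else is a hypothetical assumption. */
#define I_RX_MA          1.5     /* Bluetooth receive current, mA */
#define I_STOP_MA        0.0007  /* stop-mode current (700 nA), mA */
#define I_RUN_MA_PER_MHZ 0.022   /* run-mode current (22 uA/MHz), mA per MHz */

/* Average MCU current (mA) from radio and CPU duty cycles (0..1).
   Radio and CPU contributions are simply added, and the stop-mode floor is
   counted for the whole period, which slightly overestimates. */
double avg_current_ma(double rx_duty, double cpu_duty, double cpu_mhz)
{
    return rx_duty * I_RX_MA
         + cpu_duty * I_RUN_MA_PER_MHZ * cpu_mhz
         + I_STOP_MA;
}

/* Hours of operation for a battery of the given capacity (mAh),
   counting only the MCU's share of the load. */
double battery_hours(double capacity_mah, double avg_ma)
{
    return capacity_mah / avg_ma;
}
```

With a hypothetical 20% receive duty cycle and the CPU busy 50% of the time at 100 MHz, the estimate is about 1.4 mA, so a hypothetical 30 mAh cell would cover the MCU’s share of the load for roughly 21 hours.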

Improving Speech Clarity in Noisy Environments

To appreciate the benefits of Alif’s Balletto MCUs, consider a common use case—enhancing speech clarity in noisy environments. Even with advanced hearing aids, users still struggle to understand speech in loud places like restaurants, outdoor spaces, or parties.

Balletto MCUs provide the performance and flexibility to implement an AI-based solution to the speech-in-noise problem. Multiple MEMS microphones placed strategically in the device capture sound from multiple directions, providing spatial information about the acoustic environment.

The Cortex-M55 CPU, with Helium vector extensions, accelerates front-end audio preprocessing, filtering, and beamforming to isolate speech from noise. The Ethos-U55 NPU runs state-of-the-art deep neural networks that have been trained on vast datasets of speech and noise samples, allowing the device to identify and separate the desired speech signal from noise sources. The neural networks can also be personalized to match a user’s hearing profile and adapted over time as their hearing changes.
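The text does not specify which beamformer is used, so as a point of reference here is the classic first-order differential ("delay-and-subtract") beamformer, a common choice for closely spaced hearing-aid microphones. The C sketch places a null toward the rear by delaying the rear microphone by the acoustic travel time between the two microphones. It rounds that delay to whole samples for simplicity (real designs use fractional-delay filters and equalize the resulting low-frequency roll-off); the spacing, sample rate, and names are hypothetical.

```c
#define SPEED_OF_SOUND_M_S 343.0f
#define MAX_DELAY 8

/* Acoustic travel time between two microphones spaced spacing_m apart
   along the look direction, rounded to whole samples. */
int mic_delay_samples(float spacing_m, float fs_hz)
{
    return (int)(spacing_m * fs_hz / SPEED_OF_SOUND_M_S + 0.5f);
}

typedef struct {
    float rear_hist[MAX_DELAY + 1];  /* rear-mic history, [0] newest */
    int   delay;                     /* 0..MAX_DELAY samples */
} diff_beamformer_t;

void diff_beamformer_init(diff_beamformer_t *b, int delay)
{
    for (int i = 0; i <= MAX_DELAY; ++i) b->rear_hist[i] = 0.0f;
    if (delay < 0) delay = 0;
    if (delay > MAX_DELAY) delay = MAX_DELAY;
    b->delay = delay;
}

/* y[n] = front[n] - rear[n - delay]. Sound from behind reaches the rear mic
   first; after the delay it lines up with the front mic and cancels. */
float diff_beamformer_process(diff_beamformer_t *b, float front, float rear)
{
    for (int i = MAX_DELAY; i > 0; --i) b->rear_hist[i] = b->rear_hist[i - 1];
    b->rear_hist[0] = rear;
    return front - b->rear_hist[b->delay];
}
```

With 14.3 mm spacing at 48 kHz the delay is two samples, and a source directly behind the wearer is cancelled exactly, while sound from the front passes through.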

Sophisticated processing, such as detecting and reducing background noise or detecting and enhancing speech, is made easier by combining digital signal processing techniques with artificial intelligence, especially deep neural networks. Balletto MCU algorithms dynamically adjust beamforming and noise reduction parameters, steering the microphone array toward the talker while suppressing background noise. This “clean” speech is then remixed and played back to the user with minimal latency, providing a dramatic improvement in clarity. By performing all of the processing on-chip, the hearing aid delivers real-time performance at low power, extending battery life.
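The Balletto noise-reduction algorithms themselves are not described here, so the C sketch below shows a classic stand-in: per-frequency-bin Wiener-style suppression gains computed from a running noise estimate. It assumes the frame has already been transformed to the frequency domain (the FFT is not shown), and the floor, smoothing constant, and function name are hypothetical. DNN-based systems often replace the hand-written SNR estimate with a network-predicted gain (a mask), but the resulting gain is applied to the spectrum in the same way.

```c
#include <stddef.h>

#define GAIN_FLOOR  0.1f   /* hypothetical: limit attenuation to about -20 dB */
#define NOISE_ALPHA 0.95f  /* hypothetical smoothing for the noise estimate */

/* power[k]: |X_k|^2 of the current frame.
   noise[k]: running noise-power estimate, updated in place.
   gain[k]:  suppression gain to multiply onto X_k before the inverse FFT. */
void wiener_gains(const float *power, float *noise, float *gain, size_t bins)
{
    for (size_t k = 0; k < bins; ++k) {
        /* Simple tracker: follow decreases at once, rise only slowly. */
        if (power[k] < noise[k]) noise[k] = power[k];
        else noise[k] = NOISE_ALPHA * noise[k] + (1.0f - NOISE_ALPHA) * power[k];

        float g;
        if (noise[k] <= 0.0f) {
            g = 1.0f;                      /* no noise estimate yet: pass through */
        } else {
            float snr = (power[k] - noise[k]) / noise[k];  /* estimated SNR */
            if (snr < 0.0f) snr = 0.0f;
            g = snr / (1.0f + snr);        /* Wiener gain */
        }
        gain[k] = g < GAIN_FLOOR ? GAIN_FLOOR : g;
    }
}
```

The gain floor limits how aggressively noise-only bins are attenuated, which helps avoid the "musical noise" artifacts that full suppression tends to produce.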

Emerging BLE Applications: Auracast Assistive Listening

Auracast™, a broadcast capability of LE Audio, enables the creation of audio broadcast zones where a transmitter can send an audio stream to an unlimited number of Bluetooth receivers in close proximity. This technology has the potential to replace RF-based assistive listening systems with a flexible and cost-effective solution based on standard Bluetooth hardware.
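To join a broadcast zone, a receiver first has to recognize Auracast sources in the advertisements it scans. The C sketch below walks the length-type-value AD structures of an advertising payload and extracts the 24-bit Broadcast_ID (carried in Service Data under the Broadcast Audio Announcement Service UUID) and the human-readable Broadcast Name. The constants are believed to match the Bluetooth SIG Assigned Numbers but should be verified against the current document; the struct and function names are hypothetical, and production stacks provide their own parsers.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define AD_TYPE_SERVICE_DATA_16       0x16    /* verify against Assigned Numbers */
#define AD_TYPE_BROADCAST_NAME        0x30
#define UUID_BCAST_AUDIO_ANNOUNCEMENT 0x1852

typedef struct {
    bool     has_id;
    uint32_t broadcast_id;  /* 24-bit Broadcast_ID */
    char     name[33];      /* Broadcast Name, NUL-terminated */
} bcast_info_t;

/* Returns false if an AD structure runs past the end of the payload. */
bool parse_broadcast_adv(const uint8_t *adv, size_t len, bcast_info_t *out)
{
    memset(out, 0, sizeof *out);
    size_t i = 0;
    while (i < len) {
        uint8_t field_len = adv[i];            /* covers AD type + data */
        if (field_len == 0) break;             /* padding / early end */
        if (i + 1 + field_len > len) return false;
        uint8_t type = adv[i + 1];
        const uint8_t *data = &adv[i + 2];
        size_t data_len = field_len - 1u;

        if (type == AD_TYPE_SERVICE_DATA_16 && data_len >= 5) {
            uint16_t uuid = (uint16_t)(data[0] | (data[1] << 8));  /* little-endian */
            if (uuid == UUID_BCAST_AUDIO_ANNOUNCEMENT) {
                out->broadcast_id = (uint32_t)data[2] | ((uint32_t)data[3] << 8)
                                  | ((uint32_t)data[4] << 16);
                out->has_id = true;
            }
        } else if (type == AD_TYPE_BROADCAST_NAME) {
            size_t n = data_len < sizeof out->name - 1 ? data_len : sizeof out->name - 1;
            memcpy(out->name, data, n);
            out->name[n] = '\0';
        }
        i += 1u + field_len;
    }
    return true;
}
```

A hearing aid or companion app could list the names found this way (for example, a lobby TV or a gate announcement channel) and let the user pick which broadcast to join.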

One of the most promising applications for Auracast is in public spaces equipped with public address (PA) systems, such as airports, cinemas, lecture halls, and places of worship. By integrating transmitters into the PA system, these venues can broadcast high-quality audio directly to visitors’ Auracast-capable Bluetooth headsets or hearing aids. Users can simply join the broadcast zone and listen to the PA audio at their preferred volume, eliminating the need for expensive dedicated receivers and allowing them to use their own familiar devices.

Auracast also offers personalized assistive listening experiences in settings like banks, retail stores, and medical offices. For example, service counters can be equipped with Auracast transmitters that broadcast audio from the service representative’s microphone directly to a customer’s hearing aid. This allows for discreet, one-to-one communication without the need for raised voices or bulky assistive listening hardware. The customer can adjust the volume to their comfort level and engage in a natural conversation, even in noisy environments.

In venues with television sets, such as waiting rooms, gyms, restaurants, and transportation hubs, Auracast can be used to create “silent TV” experiences. The TV audio is broadcast via Auracast to nearby Bluetooth receivers, allowing users to listen through headsets or hearing aids without disturbing others. This avoids the need for closed captioning or muted displays, providing a more engaging and accessible viewing experience for all.

Auracast can also be used in group tour scenarios, such as museums, factories, convention centers, and sightseeing excursions. Tour guides can be equipped with Auracast transmitters that broadcast their voice directly to participants’ Bluetooth receivers, replacing wired or RF-based tour guide systems. Visitors can use Auracast-enabled headsets or hearing aids to listen to the guide’s commentary, adjusting the volume to their preferred level. This allows for an immersive and personalized tour experience, as participants can wander freely while still hearing the guide clearly.

In multilingual settings such as international conferences, educational programs, or cultural events, Auracast can streamline the delivery of real-time translations or simultaneous interpretation. Interpreters can broadcast their translations via Auracast transmitters, allowing attendees to listen in their preferred language using their own Bluetooth receivers. This avoids the need for expensive multi-channel IR or RF systems and enables a more accessible experience.

As Auracast adoption grows and more users come to rely on their Bluetooth hearing aids for assistive listening, it will be essential to ensure the security and reliability of these systems. Hearing aid MCUs must implement cybersecurity features, such as secure boot, encrypted data storage, and tamper-resistant key management, to protect users’ privacy and prevent unauthorized access to devices. With their advanced hardware security features, Alif’s Balletto MCUs are well positioned to meet these challenges.
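The decision at the heart of secure boot can be sketched as a small gate: run an image only if its measured digest matches the trusted value and its version is not older than an anti-rollback counter. In the hypothetical C sketch below, computing the digest and verifying the signature that vouches for the expected value are assumed to be done by a hardware crypto engine and are not shown; the struct, field, and function names are illustrative, not a real vendor API.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define DIGEST_LEN 32  /* e.g. a SHA-256 digest computed by a crypto engine */

typedef struct {
    uint32_t version;             /* monotonic firmware version */
    uint8_t  digest[DIGEST_LEN];  /* digest measured over the image */
} fw_image_t;

/* Constant-time comparison: timing does not reveal how many bytes matched. */
static bool digest_equal_ct(const uint8_t *a, const uint8_t *b, size_t n)
{
    uint8_t diff = 0;
    for (size_t i = 0; i < n; ++i) diff |= (uint8_t)(a[i] ^ b[i]);
    return diff == 0;
}

/* Accept the image only if it is not a rollback to an older version
   (min_version would live in one-time-programmable memory) and its
   digest matches the trusted, signature-verified expected value. */
bool boot_image_allowed(const fw_image_t *img,
                        const uint8_t expected[DIGEST_LEN],
                        uint32_t min_version)
{
    if (img->version < min_version) return false;  /* rollback attempt */
    return digest_equal_ct(img->digest, expected, DIGEST_LEN);
}
```

The anti-rollback check matters because an attacker who cannot forge a signature might otherwise reinstall an older, correctly signed image with a known vulnerability.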

Wireless technology makes hearing aid adjustments simple and convenient. Image credit: Shutterstock

Conclusion

Audio can now be time-shifted, shared, shaped, translated, substituted, amplified, and filtered in ways that were unimaginable only a few years ago, and the future promises even more: further assistance for people with hearing loss, enhanced audio in noisy environments, and private or customized listening experiences.

Bluetooth LE-enabled MCUs, such as Alif’s Balletto family, integrate a low-power processor with LE Audio connectivity, dedicated AI/ML acceleration, and DSP acceleration in a compact SoC, removing the size, power, and performance barriers that have slowed innovation in hearing aids for years.

Balletto MCUs also support Auracast, an emerging LE Audio broadcast technology that delivers additional consumer benefits. Ultimately, these solutions should make better hearing more accessible to people around the world living with hearing loss.

If you are interested in learning more about Alif Semiconductor’s Ensemble and Balletto families of MCUs, Alif offers evaluation kits, documentation, software & tools, and support resources. To discuss your specific application requirements, please contact Alif Semiconductor’s sales team online.