Benchmarking scores show conclusively that a microcontroller with a neural processing unit (NPU) performs AI/ML inferencing operations far better than an MCU with a CPU alone. Alif’s tests show that for speech recognition, for instance, a combination of an Ensemble MCU’s Arm Cortex-M55 CPU and Arm Ethos-U55 NPU performs inferencing around 800x faster and uses around 400x less energy per inference than an older Cortex-M CPU on its own.
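
As a quick check on those figures: energy per inference is average power multiplied by inference time, so the two quoted ratios together show how the average power draw compares. The sketch below uses the rounded "around 800x" and "around 400x" figures and assumes both were measured over the inference itself. The result is only as precise as those inputs.

```python
# Rough check using the rounded figures quoted above.
# Energy per inference E = P * t (average power times inference time), so
#   P_accel / P_cpu = (E_accel / E_cpu) / (t_accel / t_cpu).

def implied_power_ratio(speedup: float, energy_reduction: float) -> float:
    """Return P_accel / P_cpu implied by E = P * t.

    speedup          -- t_cpu / t_accel (e.g. ~800)
    energy_reduction -- E_cpu / E_accel (e.g. ~400)
    """
    energy_ratio = 1.0 / energy_reduction  # E_accel / E_cpu
    time_ratio = 1.0 / speedup             # t_accel / t_cpu
    return energy_ratio / time_ratio


ratio = implied_power_ratio(speedup=800, energy_reduction=400)
print(f"Average power during inference: ~{ratio:.1f}x the CPU-only figure")
```

With these inputs the ratio is 2. While inferring, the CPU+NPU combination draws roughly twice the average power, but it finishes around 800 times sooner, so each inference costs around 400 times less energy.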

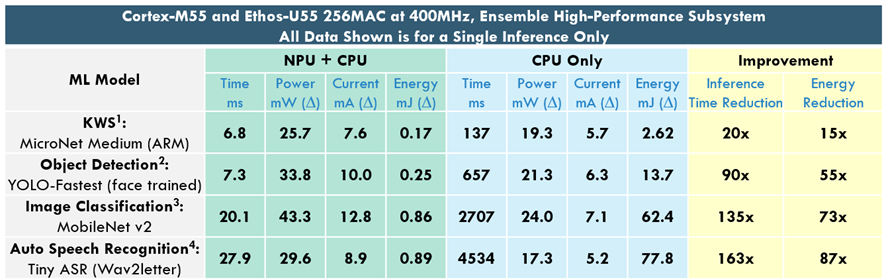

This table shows how much better a multi-core Ensemble MCU performs inferencing operations when using its CPU and NPU together than when the CPU operates alone, with the NPU disabled:

The conclusion is clear: a microcontroller that performs AI/ML operations at the edge needs at least one NPU alongside one or more CPUs.

But does simply bolting an NPU onto an existing microcontroller system turn it into an edge AI MCU?

Absolutely not, for two reasons.

The first is that the system needs to intelligently partition AI/ML workloads between the CPU and the NPU. This is much easier if the CPU and the NPU share the same software development environment. OEMs do not want to retool their entire development infrastructure for a new architecture, adopting new toolchains and new instruction sets, just to run AI/ML workloads.

In the Ensemble and Balletto MCUs, the Cortex-M CPU and the Ethos NPU share a common Arm environment. In fact, the Ethos-U NPU is essentially a co-processor that integrates seamlessly with the Cortex-M CPU. The Arm Vela compiler automatically splits the ML workload between them, with typically 95% or more of it running on the NPU.
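
The split can be pictured at the operator level. Operators the NPU supports are mapped to it, and anything else falls back to the CPU. The Python sketch below is a simplified, hypothetical model of that idea. It is not Vela's actual algorithm or API. The supported-operator set, the layer names, and the MAC counts are all invented for illustration, and multiply-accumulate (MAC) counts are assumed as a rough proxy for work.

```python
from dataclasses import dataclass

# Hypothetical set of operator types the NPU can execute. The real list is
# determined by the Vela compiler and the Ethos-U configuration, not this code.
NPU_SUPPORTED_OPS = {"CONV_2D", "DEPTHWISE_CONV_2D", "FULLY_CONNECTED",
                     "AVERAGE_POOL_2D", "ADD", "RESHAPE"}


@dataclass
class Op:
    name: str
    op_type: str
    macs: int  # multiply-accumulate count, used here as a rough work estimate


def partition(ops):
    """Assign each operator to 'npu' or 'cpu'; return (plan, npu_share_of_macs)."""
    plan = []
    npu_macs = 0
    total_macs = 0
    for op in ops:
        target = "npu" if op.op_type in NPU_SUPPORTED_OPS else "cpu"
        plan.append((op.name, target))
        total_macs += op.macs
        if target == "npu":
            npu_macs += op.macs
    share = npu_macs / total_macs if total_macs else 0.0
    return plan, share


# Illustrative small CNN; layer names and MAC counts are made up.
model = [
    Op("conv1", "CONV_2D", 2_000_000),
    Op("dw1", "DEPTHWISE_CONV_2D", 300_000),
    Op("pw1", "CONV_2D", 1_500_000),
    Op("pool", "AVERAGE_POOL_2D", 10_000),
    Op("fc", "FULLY_CONNECTED", 50_000),
    Op("postproc", "CUSTOM", 5_000),  # unsupported here, so it falls back to the CPU
]

plan, share = partition(model)
for name, target in plan:
    print(f"{name:8s} -> {target}")
print(f"Share of MACs on NPU: {share:.1%}")
```

Because the compute-heavy convolution and fully connected layers land on the NPU, a small unsupported operator on the CPU barely affects the overall share.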

(Helpfully, this lets the CPU sleep or do other work while the NPU runs the inference.)

The second reason the NPU needs to be tightly paired with a CPU is that producing fast, accurate inferences is a system-level operation: it does not depend only on the NPU.

In a typical AI application such as face recognition, the NPU applies image classification algorithms to an image of a face. But the image captured by the system’s camera might include irrelevant pixels, for instance of objects in the background. Excluding these pixels in advance leaves the NPU less data to process, so each inference completes faster and consumes less energy.
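
The effect of discarding background pixels can be sketched in a few lines of Python. For illustration only, this assumes that NPU work scales roughly with the number of input pixels. The frame size and the face bounding box are hypothetical values. In practice, the box might come from a lightweight detector.

```python
def crop(image, top, left, height, width):
    """Return the sub-image inside the given bounding box (rows x columns)."""
    return [row[left:left + width] for row in image[top:top + height]]


def pixel_count(image):
    """Total number of pixels in a list-of-rows image."""
    return sum(len(row) for row in image)


# Hypothetical 320x240 grayscale frame (zeros stand in for real pixel data).
frame = [[0] * 320 for _ in range(240)]

# Hypothetical face bounding box, e.g. reported by a cheap detector on the CPU.
face = crop(frame, top=60, left=110, height=120, width=100)

full, roi = pixel_count(frame), pixel_count(face)
print(f"Full frame: {full} pixels, face region: {roi} pixels")
print(f"Data passed to the NPU: {roi / full:.1%} of the frame")
```

Here the face region is about 16% of the frame. Under the stated assumption, the NPU would have roughly a sixth of the original data to process.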

Pre-treatment functions such as streamlining, curation, and contextualization of the data are performed outside the NPU. In the Ensemble MCUs, this work draws on capabilities integrated with the CPU, such as the Helium vector extension for DSP functions and a graphics engine. A tightly paired CPU/NPU combination therefore allows pre-treatment and inferencing to be divided optimally between the two. The result is faster inferencing, for a better user experience, and lower power consumption, for longer battery run-time.
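
A tightly paired CPU and NPU can divide the work by overlapping the stages: while the NPU runs inference on one frame, the CPU pre-processes the next. The sketch below is a simple timing model of that scheduling idea. The per-stage times are hypothetical, and the model ignores memory-bandwidth contention and synchronization overhead.

```python
def sequential_time(n_frames, t_pre, t_inf):
    """CPU pre-processes a frame, then waits while the NPU runs inference."""
    return n_frames * (t_pre + t_inf)


def pipelined_time(n_frames, t_pre, t_inf):
    """CPU pre-processes frame k+1 while the NPU runs inference on frame k.

    After the first frame fills the pipeline, each further frame costs only
    the slower of the two stages.
    """
    if n_frames == 0:
        return 0.0
    return t_pre + t_inf + (n_frames - 1) * max(t_pre, t_inf)


# Hypothetical per-frame stage times in milliseconds.
T_PRE_MS = 4.0  # e.g. crop/scale/normalize on the CPU (Helium DSP code)
T_INF_MS = 6.0  # inference on the NPU

for n in (1, 10, 100):
    seq = sequential_time(n, T_PRE_MS, T_INF_MS)
    pipe = pipelined_time(n, T_PRE_MS, T_INF_MS)
    print(f"{n:3d} frames: sequential {seq:6.1f} ms, pipelined {pipe:6.1f} ms")
```

With these assumed times, 100 frames take 1000 ms sequentially and 604 ms pipelined. In steady state, each frame costs only the slower stage (6 ms) rather than the sum of both stages (10 ms).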

That’s why tight pairing of the NPU and CPU is an important difference in the Ensemble MCUs.